Top practices to follow for sustainable test automation

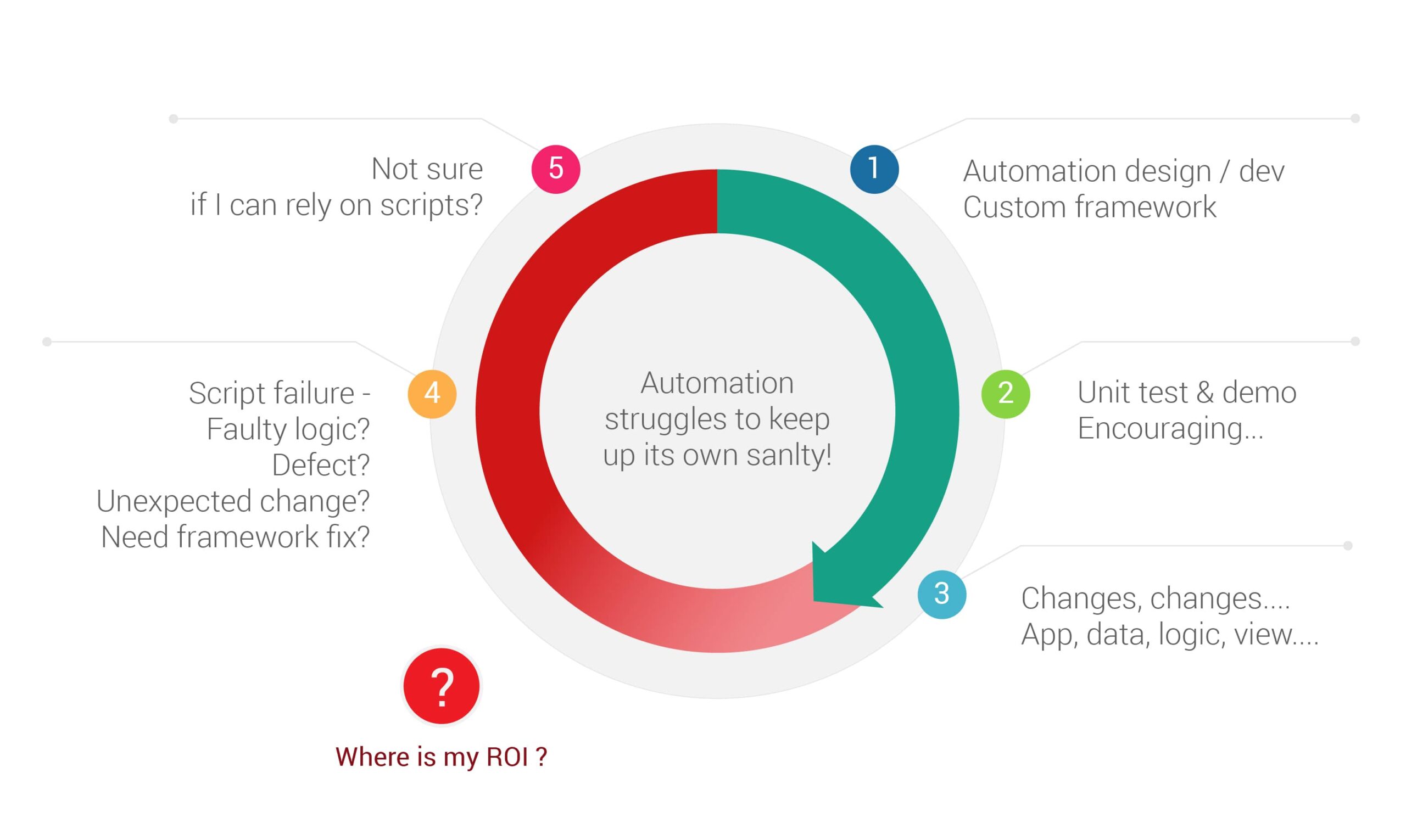

Lack of sustainability tops the list of test automation failures across the industry. Testing teams get pulled into a vicious cycle of automation maintenance without reaping the benefits of the exercise. While teams play catch-up to keep scripts intact, the original objective takes a backseat. Test automation has been a game-changer for developers looking to streamline their testing processes, but only a sustained focus on maintainability lets it keep catching bugs proactively and supporting superior user experiences.

Top test automation strategies

Here are some practices that can help achieve sustainable test automation.

1. Choose smart test scripts and keep them simple

There is enough complexity in the application under test. It often feels technically fulfilling to over-optimize scripts and complicate test scenario coding, but it is perfectly fine to break scripts down so that each one achieves one simple task at a time. If multiple data variations for the same use case navigate the application through different paths, consider making them separate scenarios. Use cases that seem related today may diverge as the application changes. Rather than inserting a lot of conditional logic, simplify. It is also essential to choose the right tests to automate. Be sure to include:

- Regression tests identify unexpected bugs in existing functionality caused by code changes. Automating regression tests helps swiftly detect issues when the software is deployed.

- Smoke tests or sanity tests verify basic application functionality, covering core features and critical workflows. Automating smoke tests gives teams quick, routine assessments of application stability. The rapid feedback loop helps teams catch potential mishaps early in development, before they grow into significant issues.

- Data-driven tests efficiently validate multiple input scenarios. These tests assess the software against a broad spectrum of systematically varied inputs, helping uncover edge cases and unexpected behavior that might otherwise go unnoticed.

- Automating complex manual test cases, such as those that involve intricate workflows, complex data combinations, or challenging scenarios, ensures consistent and precise execution, which enhances the accuracy of testing.

2. Amplify test coverage and reliability through data-driven tests

The main benefit of data-driven testing lies in its adaptability. The same test logic runs against many data sets that mimic various user interactions, responses, and inputs. This diverse range of inputs brings hidden issues to light: bugs that might otherwise remain unnoticed in standard test scenarios. By validating the software against many inputs, it is possible to fortify its resilience and boost the likelihood of uncovering edge cases that could disrupt user experiences. In summary, data-driven testing empowers testers to achieve a more thorough and reliable testing process.
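
As a rough illustration of the idea, the sketch below keeps the test logic in one place and drives it from a table of inputs, using Python's standard `unittest` module. The function `is_valid_username` and the rows in `USERNAME_CASES` are hypothetical stand-ins, not part of any real application; in practice the rows might come from a CSV file or a database.

```python
import unittest


# Hypothetical function under test: a stand-in for real application logic.
def is_valid_username(name: str) -> bool:
    """Accept 3-20 character names made of letters, digits, or underscores."""
    return 3 <= len(name) <= 20 and all(c.isalnum() or c == "_" for c in name)


# Each row is one scenario: (input, expected result, description).
# Adding a scenario means adding a row, not writing a new test.
USERNAME_CASES = [
    ("alice", True, "typical name"),
    ("ab", False, "too short"),
    ("a" * 20, True, "upper length boundary"),
    ("a" * 21, False, "just past the upper boundary"),
    ("bob_smith", True, "underscore allowed"),
    ("bob smith", False, "space rejected"),
    ("", False, "empty input"),
]


class UsernameValidationTest(unittest.TestCase):
    def test_username_cases(self):
        for value, expected, description in USERNAME_CASES:
            # subTest reports each failing row separately instead of
            # stopping at the first failure.
            with self.subTest(description=description, value=value):
                self.assertEqual(is_valid_username(value), expected)
```

Saved in a test module, this can be run with `python -m unittest`. Because the boundary values sit next to the typical ones in the same table, gaps in coverage tend to be easier to spot.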

3. Synchronization between virtual and real-world scenarios

One of the most common reasons for automation test failures is the lack of proper synchronization. When an application's functionality and performance are tested across different devices, real device testing is unavoidable. Virtual environments are convenient, but they cannot replicate the nuanced intricacies of actual devices. Although they might accurately mimic certain aspects of device behavior, they can miss critical subtleties that arise from device-specific variations. Therefore, combining virtual and real-device testing is necessary for a comprehensive validation process.
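
One common reading of synchronization is making the script wait for the application to reach an expected state instead of sleeping for a fixed time, which matters when real devices respond at different speeds than virtual ones. The sketch below is a minimal, tool-agnostic polling helper under that assumption; `wait_until` and its parameters are hypothetical names, and most automation tools ship their own equivalent.

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    timeout: float = 10.0,
    interval: float = 0.2,
) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Returns True if the condition was met in time, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        # Pause briefly so the check does not spin the CPU.
        time.sleep(interval)
```

A test would call it with a check such as "is the confirmation banner visible?" and fail with a clear message if it returns `False`. The same script can then tolerate a fast emulator and a slower physical device without hand-tuned sleeps.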

4. Do not automate everything; enhance automated testing through division

One key strategy for optimizing the automated testing process is distributing testing tasks to team members based on their skills. By harnessing the unique perspectives of developers, testers, and domain experts, teams can achieve more thorough and effective test coverage, leading to better software quality. When testing tasks are assigned based on strengths, the team builds a detailed understanding of the inner workings of the application. While testers bring a critical eye for detail and a knack for finding vulnerabilities, domain experts hold valuable context about user needs and industry requirements. Leveraging these varied skills sets up a system of testing that covers all bases. Avoid automating corner cases that require disproportionate effort; they can be handled by manual testing.

5. Select an optimal framework for automating the testing process

The right testing framework plays a pivotal role in determining the efficiency and effectiveness of automated testing efforts. Consider the following before choosing one:

- It must align with the application’s technology stack. If the software relies on a specific set of programming languages, libraries, or frameworks, the testing solution should complement those components.

- The testing framework should function consistently across platforms and operating systems. Whether the target is desktop, mobile, or web, the solution should adapt and mimic real-world scenarios, yielding more comprehensive results.

- A framework that matches the team’s expertise helps them hit the ground running and extract maximum value from the platform.

- Factor in the immediate and potential long-term expenses associated with the chosen framework. While a high upfront cost might be justified if the platform helps boost productivity and quality, a cheaper option might incur hidden maintenance, support, and scalability costs.

- Choose tooling that complements the framework and helps address the requirements it has to meet.

6. Efficient debugging using detailed records

Detailed documentation of automated test executions, their results, and identified defects forms the backbone of a robust testing strategy. Records of the steps, actions, and outcomes of each automated run contribute to the bigger picture. Capturing test interactions during execution creates a chronological record of events, which becomes an indispensable asset during troubleshooting. Detailed records also build a historical overview of testing efforts and reveal patterns and trends across test runs, indicating recurring issues or systemic irregularities. This empowers teams to address the underlying problems before they escalate, improving software stability and reliability.
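
A chronological record of this kind can be quite small. The following sketch is one possible shape, assuming each step is stored with a UTC timestamp and exported as JSON lines; `StepRecord` and `ExecutionLog` are hypothetical names chosen for illustration, not the API of any particular tool.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class StepRecord:
    """One step of one test, with its outcome and when it happened."""
    test_name: str
    step: str
    outcome: str  # e.g. "passed" or "failed"
    detail: str = ""
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class ExecutionLog:
    """Append-only record of steps, kept in the order they were executed."""

    def __init__(self) -> None:
        self.records: List[StepRecord] = []

    def record(self, test_name: str, step: str, outcome: str, detail: str = "") -> StepRecord:
        entry = StepRecord(test_name, step, outcome, detail)
        self.records.append(entry)
        return entry

    def failures(self) -> List[StepRecord]:
        """Return only the failed steps, still in chronological order."""
        return [r for r in self.records if r.outcome == "failed"]

    def to_json_lines(self) -> str:
        """Serialize one JSON object per line for storage or later analysis."""
        return "\n".join(json.dumps(asdict(r)) for r in self.records)
```

For example, calling `log.record("login", "submit form", "failed", "timeout")` during a run leaves a timestamped entry that can be compared against entries from earlier runs when troubleshooting.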

7. Early and regular testing helps with proactive quality assurance

Starting automated testing as early as possible in the SDLC brings several benefits. Identifying bugs early saves the time, resources, and effort that would otherwise be spent resolving them downstream. Regular test iterations keep evaluation consistent. Automated tests that run frequently provide a continuous feedback loop, highlighting deviations and changes in the application. This iterative approach helps detect regressions promptly, track improvements, and keep the quality of the application stable throughout development.

8. Focus on comprehensive and high-quality test reporting

Detailed and well-structured test reports are a treasure trove of insights into the application's behavior, performance, and stability. These reports document the details of test executions, highlighting what passed, what failed, and the context in which each result occurred. With this information, teams can discern patterns, trace the evolution of issues, and understand the impact of changes on the application's behavior.
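
To make the idea of discerning patterns concrete, the sketch below summarizes a single run and flags tests that fail repeatedly across runs. It assumes each run is available as a mapping from test name to a status string; the function names and the data format are illustrative assumptions rather than the output of any specific reporting tool.

```python
from collections import Counter
from typing import Dict, List, Tuple

# One run maps each test name to its status, e.g. {"login": "passed"}.
Run = Dict[str, str]


def summarize_run(run: Run) -> Dict[str, int]:
    """Count passed and failed tests in a single run."""
    counts = Counter(run.values())
    return {
        "passed": counts.get("passed", 0),
        "failed": counts.get("failed", 0),
        "total": len(run),
    }


def recurring_failures(runs: List[Run], min_failures: int = 2) -> List[Tuple[str, int]]:
    """List tests that failed in at least `min_failures` runs, worst first."""
    failures = Counter(
        name
        for run in runs
        for name, status in run.items()
        if status == "failed"
    )
    return sorted(
        ((name, count) for name, count in failures.items() if count >= min_failures),
        key=lambda item: (-item[1], item[0]),  # most failures first, then by name
    )
```

A test that appears in `recurring_failures` run after run is a candidate for a closer look, whether the cause is a genuine defect or an unstable script.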

Conclusion

In the ever-evolving software development landscape, test automation has become a necessity. Incorporating these strategies into your testing regimen ensures robust functionality, makes the exercise sustainable, and ultimately delivers superior user experiences.

ACCELQ is a cloud-based continuous testing platform for functional and API testing needs. With its natural language-based interface for test development, ACCELQ is built for real-world challenges in test automation. Best practices are embedded at every stage of the process, and script resilience is supported by autonomics-based self-healing techniques. Sign up for a free trial and learn more about how ACCELQ can complement your efforts in building a sustainable test automation suite.

Mahendra Alladi

Founder & CEO at ACCELQ

Mahendra Alladi, a renowned entrepreneur in the ALM domain, is the driving force behind ACCELQ, a solution addressing real-world ALM challenges. Prior to ACCELQ, he successfully exited Gallop Solutions. He is an alumnus of the Indian Institute of Science, Bangalore, and topped the national Graduate Aptitude Test in Engineering (GATE).
