Machine learning and AI are now prevalent in software products and services, so we must establish best practices and tools to test, deploy, manage, and monitor ML models in real-world production. In short, MLOps seeks to avoid “technical debt” in machine learning applications. This article aims to give the reader a thorough understanding of MLOps testing and to underline its importance in the machine learning lifecycle.

Almost every organization is trying to incorporate AI/ML into its products and services. The new requirement of building ML systems extends and reshapes some ideas of the traditional DevOps process. This gives rise to a new engineering discipline called MLOps, which also brings Data Engineers and Data Scientists into the process.

What is MLOps

MLOps defines a set of practices for collaboration and communication between data scientists and operations professionals. Applying these practices increases quality, simplifies the management process, and automates the deployment of Machine Learning/Deep Learning models in production environments. As a result, it is easier to align models with business needs and regulatory requirements.

An optimal MLOps experience is one where Machine Learning assets are treated consistently with all other software assets in a CI/CD environment. ML models can be deployed alongside the services that wrap and consume them as part of a unified release process. Iterative-incremental development, automation, continuous deployment, versioning, testing, reproducibility, and monitoring are the critical concepts of MLOps.

MLOps is gradually maturing into an independent approach to ML lifecycle management. It covers the entire lifecycle: data gathering, model creation (Agile software development cycle, CI/CD), orchestration, deployment, health, diagnostics, governance, monitoring, and business metrics.

MLOps Model

The complete MLOps process includes three broad phases: "Designing the ML-powered application," "ML Experimentation and Development," and "ML Operations."

The "Design" phase is devoted to business understanding, data understanding, and designing the ML-powered software. In this step, we identify our potential users, design the machine learning solution to solve their problem, and assess the project's further development.

The next phase, "ML Experimentation and Development," is dedicated to verifying the viability of ML for our problem by implementing a proof of concept (PoC) for the ML model. We iterate over different activities, such as identifying or refining a suitable ML algorithm for our problem, data engineering, and model engineering. This phase aims to deliver a stable, high-quality ML model that we will run in production.

Finally, the "ML Operations" phase is dedicated to delivering the previously developed ML model in production using established DevOps practices such as testing, versioning, continuous delivery, and monitoring. All three phases are interconnected and affect each other. For example, design decisions made during the design stage propagate into the experimentation phase and affect the deployment options during the final operations phase.

MLOps vs. AIOps

The term "AIOps" is often used interchangeably with "MLOps," which is incorrect.

AIOps is about supporting IT operations in real time, reacting to their issues, and providing analytics to your operations teams. Its functions include performance monitoring, event analysis, correlation, and IT automation. According to Gartner, AIOps combines big data and machine learning to automate IT operations processes. The end goal of AIOps is therefore to automatically spot issues during IT operations and proactively react to them using Artificial Intelligence. Research shows that 21% of organizations plan to adopt AIOps the following year.

MLOps, on the other hand, focuses on managing the training and testing data required to create machine learning models. It is about monitoring and managing ML models, and it centers on the machine learning operationalization pipeline. AIOps, by contrast, applies cognitive computing techniques to improve IT operations, so the two should not be confused.

MLOps and AIOps serve the same end goal: business automation. However, MLOps bridges the gap between model building and deployment, while AIOps focuses on detecting and reacting to IT operations issues in real time to manage risks independently.

Testing and MLOps

Machine learning testing must include several kinds of checks to ensure that the learned logic consistently produces the desired behavior. Many automated and functional tests can therefore add value and enhance the overall quality of our ML models. The purpose of an MLOps team is to automate the deployment of ML models into the core software system or as a service component. This means automating almost every step of the ML workflow with minimal manual intervention.

Automated testing helps discover problems quickly and early. This enables immediate fixes and learning from mistakes. We must also provide specific testing support for catching ML-specific errors.

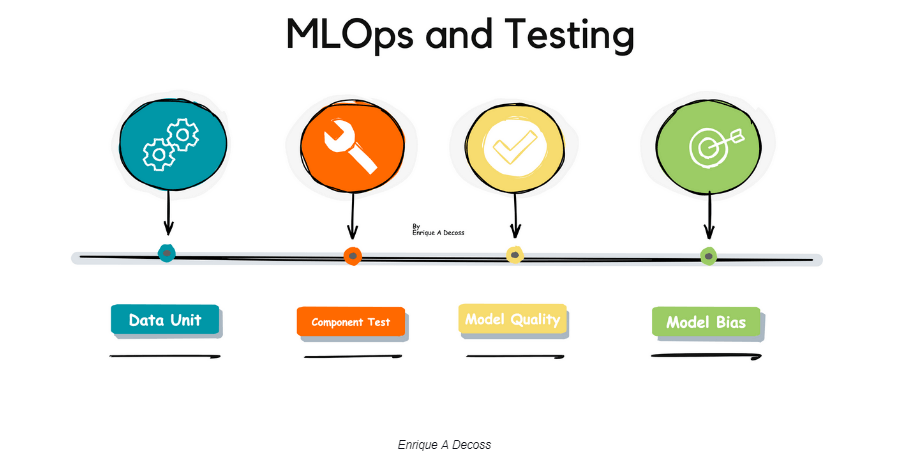

Data Unit test

Data tests let us quantify model performance for specific cases in our data. This helps identify critical scenarios where prediction errors call for careful error analysis. Automated tools also provide slicing functions that select the subsets of a dataset meeting specific criteria. In addition, keep in mind automatic checks of the data and feature schema and domain.
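The plain-Python example below is a minimal sketch of the two ideas in this paragraph. It checks rows against a schema that defines a type and an allowed domain for each column. It also computes accuracy on a slice of the data selected by a slicing function. The column names, the `SCHEMA` contents, and the helpers `check_schema` and `slice_accuracy` are hypothetical illustrations, not the API of any particular testing tool.

```python
from typing import Callable, Dict, List, Optional, Tuple

Row = Dict[str, object]

# Hypothetical schema: column -> (expected type, predicate describing the allowed domain)
SCHEMA: Dict[str, Tuple[type, Callable[[object], bool]]] = {
    "age": (int, lambda v: 0 <= v <= 120),
    "region": (str, lambda v: v in {"north", "south", "east", "west"}),
    "label": (int, lambda v: v in {0, 1}),
}


def check_schema(rows: List[Row], schema=SCHEMA) -> List[str]:
    """Return human-readable violations; an empty list means every row passed."""
    errors: List[str] = []
    for i, row in enumerate(rows):
        missing = sorted(set(schema) - set(row))
        if missing:
            errors.append(f"row {i}: missing columns {missing}")
        for column, (expected_type, in_domain) in schema.items():
            if column not in row:
                continue  # already reported as missing
            value = row[column]
            if not isinstance(value, expected_type):
                errors.append(f"row {i}: {column}={value!r} is not {expected_type.__name__}")
            elif not in_domain(value):
                errors.append(f"row {i}: {column}={value!r} is outside the allowed domain")
    return errors


def slice_accuracy(rows: List[Row], predictions: List[int],
                   slice_fn: Callable[[Row], bool]) -> Optional[float]:
    """Accuracy on the subset of rows selected by slice_fn; None if the slice is empty."""
    pairs = [(row["label"], pred) for row, pred in zip(rows, predictions) if slice_fn(row)]
    if not pairs:
        return None
    return sum(label == pred for label, pred in pairs) / len(pairs)
```

For example, `slice_accuracy(rows, predictions, lambda r: r["age"] >= 65)` reports accuracy only for older users. This makes it possible to spot a weak segment that overall accuracy would hide. Dedicated data-validation libraries offer richer versions of the same checks.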

Component integration test

For some Data Scientists or Data Engineers, the pain point is that integration testing is more end-to-end. However, working end to end gives Data Scientists the full context to identify the right problems and develop usable solutions. When integrating different services, contract testing can validate that the model's interface is compatible with the consuming ML API application.
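A contract test can be sketched as a check that a model service accepts the request fields its consumer sends and returns the response fields the consumer depends on. In the minimal example below, the `CONTRACT` dictionary, the field names, and the stand-in functions `model_v2` and `model_v3_broken` are all hypothetical. In practice, the contract would come from the consuming team, often managed with a contract-testing tool, and `predict` would call the deployed model endpoint.

```python
from typing import Any, Callable, Dict, List

# Hypothetical consumer contract: field name -> expected Python type.
CONTRACT: Dict[str, Dict[str, type]] = {
    "request": {"customer_id": str, "amount": float},
    "response": {"score": float, "model_version": str},
}

Predict = Callable[[Dict[str, Any]], Dict[str, Any]]


def verify_contract(predict: Predict, sample_request: Dict[str, Any],
                    contract: Dict[str, Dict[str, type]] = CONTRACT) -> List[str]:
    """Return contract violations; an empty list means the model interface is compatible."""
    problems: List[str] = []
    # The consumer's sample request must itself satisfy the agreed input fields.
    for field, expected in contract["request"].items():
        if not isinstance(sample_request.get(field), expected):
            problems.append(f"request field {field!r} missing or not {expected.__name__}")
    response = predict(sample_request)
    # Every field the consumer reads must be present with the agreed type.
    for field, expected in contract["response"].items():
        if field not in response:
            problems.append(f"response field {field!r} missing")
        elif not isinstance(response[field], expected):
            problems.append(f"response field {field!r} is {type(response[field]).__name__}, "
                            f"expected {expected.__name__}")
    return problems


def model_v2(request: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical stand-in for a compatible model service.
    return {"score": 0.87, "model_version": "2.0.0"}


def model_v3_broken(request: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical stand-in for a release that renamed "score", breaking the consumer.
    return {"probability": 0.87, "model_version": "3.0.0"}
```

Running `verify_contract(model_v3_broken, ...)` in CI would report the missing `score` field before the new model reaches the consuming application.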

Model Quality

For ML quality, we can use threshold tests or a ratcheting approach to guarantee that new models do not degrade against a known performance baseline. (A straightforward way to pick a threshold is to take the median of the predicted values of the positive cases in a test set.) Model performance is non-deterministic, but it is crucial to ensure that our models do not exceed the previously defined error rate. Other areas of ML quality include ML API stress testing and verifying that the model can restore from a checkpoint after a mid-training crash.
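The difference between a fixed threshold test and a ratchet can be sketched in a few lines. The example assumes that error rate is the quality metric. It also uses a small `tolerance` to absorb run-to-run noise from non-deterministic training. The function names and numbers are illustrative assumptions. A real pipeline would load the baseline from the stored results of the previously accepted model.

```python
from typing import Sequence


def error_rate(labels: Sequence[int], predictions: Sequence[int]) -> float:
    """Fraction of predictions that disagree with the labels."""
    if not labels or len(labels) != len(predictions):
        raise ValueError("labels and predictions must be non-empty and of equal length")
    return sum(y != p for y, p in zip(labels, predictions)) / len(labels)


def threshold_test(candidate_error: float, max_error: float) -> bool:
    """Fixed threshold test: pass only if the error rate stays within the agreed limit."""
    return candidate_error <= max_error


def ratchet(candidate_error: float, baseline_error: float, tolerance: float = 0.0) -> float:
    """Ratchet test: fail if the candidate is worse than the best known baseline
    (plus a tolerance for non-deterministic training). Otherwise return the new
    baseline, which only ever moves toward lower error."""
    if candidate_error > baseline_error + tolerance:
        raise AssertionError(
            f"regression: error {candidate_error:.3f} exceeds baseline "
            f"{baseline_error:.3f} + tolerance {tolerance:.3f}"
        )
    return min(candidate_error, baseline_error)
```

A fixed threshold never tightens on its own. A ratchet, by contrast, adopts each improvement as the new bar. For this reason, the stored baseline should be updated only after the candidate model is actually promoted.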

Model bias/fairness/Inclusion testing

It is also crucial to check how the model performs against baselines for specific subsets of the data. For example, the training data might have an inherent bias, with many more data points for some values of a feature (e.g., gender, age, or region) than for others. Sometimes we should collect more data that includes potentially under-represented categories (see Tour of Data Sampling Methods for Imbalanced Classification).
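One simple way to check performance against a baseline for specific groups is to compare per-group accuracy with overall accuracy. Groups that make up only a small share of the data can be flagged at the same time. The sketch below assumes that a sensitive attribute such as region is available for each evaluation row. The thresholds `max_gap` and `min_share` are hypothetical defaults that a team would need to choose for its own use case.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence


def per_group_accuracy(labels: Sequence[int], predictions: Sequence[int],
                       groups: Sequence[str]) -> Dict[str, float]:
    """Accuracy computed separately for each group value (e.g. each region)."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for label, pred, group in zip(labels, predictions, groups):
        total[group] += 1
        correct[group] += int(label == pred)
    return {group: correct[group] / total[group] for group in total}


def fairness_flags(labels: Sequence[int], predictions: Sequence[int], groups: Sequence[str],
                   max_gap: float = 0.05, min_share: float = 0.10) -> List[str]:
    """Flag groups that trail overall accuracy by more than max_gap, or that make up
    less than min_share of the evaluation data (possible under-representation)."""
    overall = sum(int(y == p) for y, p in zip(labels, predictions)) / len(labels)
    counts = Counter(groups)
    flags: List[str] = []
    for group, acc in sorted(per_group_accuracy(labels, predictions, groups).items()):
        if overall - acc > max_gap:
            flags.append(f"{group}: accuracy {acc:.2f} vs overall {overall:.2f}")
        if counts[group] / len(groups) < min_share:
            flags.append(f"{group}: only {counts[group]} of {len(groups)} rows")
    return flags
```

A group flagged for low share is a candidate for additional data collection or resampling. A group flagged for an accuracy gap points to a slice that needs closer error analysis.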

Final Thoughts

MLOps systems can be complicated to test because they involve many areas to check. Nevertheless, testing is still essential for high-quality ML software systems. These tests can also give us a behavioral report of trained models. That report serves as a well-organized strategy for error analysis and helps improve our automation so we can deliver faster.

There is a difference between developing the path and testing the way. Both must coexist; otherwise, it is easy to end up off track. - Enrique A. DeCoss

Today, ML models depend on a large amount of "traditional software development" to process data inputs, create feature representations, perform data augmentation, orchestrate model training, and expose interfaces to external systems. The complete ML development pipeline includes three levels where changes can occur: data, ML model, and code. This means that in machine learning-based systems, a build might be triggered by a code change, a data change, a model change, or any combination of them.
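Because a build may be triggered by a change at any of the three levels, a pipeline needs a way to detect which level changed. One minimal approach assumes that each level's artifacts are files on disk. The pipeline hashes their contents and compares the result with the fingerprints recorded for the last build. The function names and the `"data"`/`"model"`/`"code"` keys are illustrative assumptions. Dedicated data- and model-versioning tools track this more robustly.

```python
import hashlib
from pathlib import Path
from typing import Dict, Iterable, List

LEVELS = ("data", "model", "code")


def fingerprint(paths: Iterable[Path]) -> str:
    """SHA-256 over file names and contents, in sorted order so the result is stable."""
    digest = hashlib.sha256()
    for path in sorted(paths):
        digest.update(str(path).encode("utf-8"))
        digest.update(path.read_bytes())
    return digest.hexdigest()


def changed_levels(current: Dict[str, str], previous: Dict[str, str]) -> List[str]:
    """Return the levels whose fingerprint differs from the last recorded build."""
    return [level for level in LEVELS if current.get(level) != previous.get(level)]


def should_trigger_build(current: Dict[str, str], previous: Dict[str, str]) -> bool:
    # Any combination of data, model, or code changes triggers a new build.
    return bool(changed_levels(current, previous))
```

In a CI job, `current` could be built with calls such as `fingerprint(Path("data").rglob("*.csv"))`, one per level. The `previous` value would be loaded from the metadata of the last successful build. The directory layout in that call is hypothetical.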

Effective testing for MLOps requires a mature level of automation. We must work directly with Data Scientists, Data Engineers, Testing, and Ops to deliver high-quality ML models. Testing does not kill innovation; it improves it.

Happy Bug Hunting!

Enrique DeCoss | Senior Quality Assurance Manager | FICO

Enrique is an industry leader in quality strategy with 17+ years of experience implementing automation tools. Enrique has a strong background in web tools, API testing strategies, performance testing, Linux OS, and testing techniques. Enrique loves to share his in-depth knowledge in competencies including Selenium, JavaScript, Python, ML testing tools, cloud computing, agile methodologies, and people management.
