While DevOps, Engineering, and QA leaders can often provide a preliminary assessment of their team’s performance, some organizations struggle to quantify the real value to the business or to pinpoint where and how improvements can be made. The right combination of metrics can help by measuring software delivery performance accurately, guiding its optimization, and validating its business value.

Optimizing test automation to shorten lead time to market is not a new idea, and many have sought ways to measure it. One point everyone seems to agree on is that to improve something, you must be able to define it, split it into critical elements, and then measure those elements (I prefer the old saying: if you can measure it, you can improve it). Opinions on what to measure, and how, vary from there. For example, test automation velocity and coverage may each seem like essential components, yet on their own they may not provide any real value.

Defining Success in Test Automation

Many times I've heard of companies wanting to implement automation testing successfully, and I've seen many of them fail for a variety of reasons: high maintenance cost, flaky tests, a long wait before results appear, the wrong automation strategy, and a lack of good candidates for automation, among others. Keep in mind that automation is not just a QA effort; like any development process, it must involve every area of the organization.

Choosing the right test automation approach for your company often comes down to having the right ingredients (the right tools, people, process, infrastructure, and so on) and knowing how to combine them. At least, that is how I visualize the test automation effort: mixing the ingredients while considering which of your current business problems automation testing is good at solving.

When it comes to defining the success of a test automation effort, can we do it with the old formulas: ROI, percentage of time saved, number of defects found by test scripts, percentage of manual effort reduced, and so on? Is it possible to predict success from those numbers? In my view, the only value worth measuring is the value delivered to our customers and the positive impact our automation testing process has on the business; I'll elaborate on this in the rest of this post.

Accelerate Metrics

Accelerate, published in 2018, was written by Dr. Nicole Forsgren, Jez Humble, and Gene Kim. The authors set out to understand what makes high-performing teams different from low-performing ones; for software engineering teams, that means delivering quickly while keeping code quality high.

The authors analyzed 23,000 data points from companies around the world, ranging from startups to large enterprises. Their study uncovered four key metrics, the Accelerate metrics, that serve as solid performance indicators for teams with high sales, customer satisfaction, and efficiency.

- Deployment frequency -> How often do we deploy to production?

- Lead time for changes -> How long does it take for a commit to be running in production?

- Mean time to restore -> How long does it take us to restore service after a failure in production?

- Change failure rate -> How often do our deployments fail in production?
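To make the four definitions concrete, here is a minimal Python sketch that computes them from a list of deployment records. The `Deployment` record shape and the `accelerate_metrics` and `hours` functions are hypothetical, not part of any tool mentioned here. The sketch assumes a failure is detected at deploy time (so restore time is measured from the deployment), uses the mean rather than the median for lead time, and reports deployment frequency per day.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it was running in production
    failed: bool                              # did it cause a failure in production?
    restored_time: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def accelerate_metrics(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute the four Accelerate metrics for one reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    failures = [d for d in deployments if d.failed]
    # Assumption: a failure is detected at deploy time, so restore time starts there.
    restore_hours = [hours(d.restored_time - d.deploy_time)
                     for d in failures if d.restored_time is not None]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "lead_time_for_changes_hours": mean(hours(d.deploy_time - d.commit_time) for d in deployments),
        "mean_time_to_restore_hours": mean(restore_hours) if restore_hours else 0.0,
        "change_failure_rate": len(failures) / len(deployments),
    }


# Usage: two deployments in a 7-day period, one of which failed.
t0 = datetime(2024, 1, 1, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4), failed=False),
    Deployment(t0, t0 + timedelta(hours=8), failed=True, restored_time=t0 + timedelta(hours=10)),
]
metrics = accelerate_metrics(deploys, period_days=7)
print(metrics)
```

Returning all four values from one function reflects the point that they are meant to be read together rather than optimized one at a time.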

The four Accelerate metrics reflect the core capability categories that the authors identified as essential to software delivery performance:

- Continuous Delivery/Continuous Testing

- Architecture

- Product and Process

- Lean Management and Monitoring

- Cultural

The metrics above are technology-agnostic and equally valid for a small startup team or for multiple value-stream-oriented teams in a large, complex enterprise. Used together, the four metrics are powerful drivers of performance, because teams cannot, consciously or unconsciously, game them by improving one while another suffers. Teams still need room to optimize themselves, of course.

It is essential to use them in combination, because any single metric in isolation may not provide the desired value. For example, measuring the rate of change (deployment frequency) without the change failure rate could lead to poor-quality changes that hurt service levels and customer experience. Conversely, counting only the time between failures without the time to restore could encourage "big bang" deployment strategies, resulting in infrequent but high-impact downtime.

In its later research, the DORA (DevOps Research and Assessment) team added a fifth metric to these four: reliability.

Measure What Matters in Automation Testing

We have covered the Accelerate metrics, but where is the connection between test automation and value to our customers? The first two metrics relate to the engineering goal of delivering software, and test automation is key to both deployment frequency and lead time for changes. If we want to deploy more often, automation testing is crucial; likewise, to reduce the lead time for a change while still uncovering bugs, test automation must be in place.

Now that we understand our goals for automation testing (increasing deployment frequency and reducing lead time for changes), the question remains: how can we track our test automation effort? Many people suggest tracking value through your customers, but without numbers, that can be pure perception. We need numbers, and then we need to act on them; as Chris Hughes put it, the best way to listen to customers is through metrics.

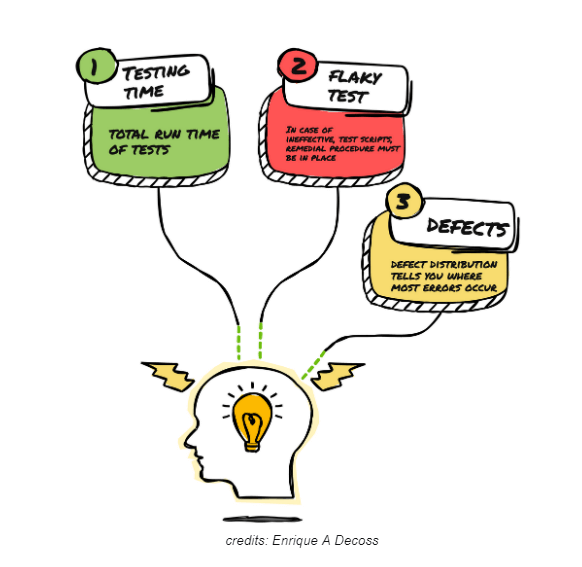

Testing Time

This metric captures the total run time of our tests (in this case, our test automation scripts). Since the goal of adopting automation is to accelerate the testing process, automated tests that take too long to run need to be reviewed. This number can also be compared directly with the release cycle to see the impact of test automation on deployment frequency.

Flaky Test

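The reliability of our scripts is essential when running automation; after all, if a script is ineffective, the test is worthless. A flaky test is one that passes on some runs and fails on others against the same code, so its failures cannot be trusted. A high number of script failures that are not caused by real defects likely means our team needs remedial training on writing robust test scripts.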
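One common way to spot flaky tests is to look for tests whose outcome changed while the code under test did not. The minimal Python sketch below assumes CI history can be exported as hypothetical `(test_name, commit_sha, passed)` tuples; `find_flaky_tests` is an illustrative name, not a real CI tool's API. It flags a test as flaky if it both passed and failed on the same commit, and reports its overall failure rate.

```python
from collections import defaultdict


def find_flaky_tests(runs: list[tuple[str, str, bool]]) -> dict[str, float]:
    """Return each flaky test with its overall failure rate.

    runs: (test_name, commit_sha, passed) tuples, e.g. exported from CI history.
    A test is treated as flaky if it both passed and failed on the same commit,
    i.e. its outcome changed while the code under test did not.
    """
    outcomes = defaultdict(set)          # (test, commit) -> outcomes seen
    failures = defaultdict(int)          # test -> number of failed runs
    total = defaultdict(int)             # test -> number of runs
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
        total[test] += 1
        if not passed:
            failures[test] += 1
    flaky = {test for (test, _), seen in outcomes.items() if len(seen) == 2}
    return {test: failures[test] / total[test] for test in sorted(flaky)}


# Usage: "checkout" fails consistently (a real failure), "login" is flaky.
runs = [
    ("login", "abc123", True),
    ("login", "abc123", False),
    ("login", "def456", True),
    ("checkout", "abc123", False),
    ("checkout", "abc123", False),
]
flaky = find_flaky_tests(runs)
print(flaky)
```

Separating consistent failures from flaky ones matters: the former point at product defects, while the latter point at the scripts themselves.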

Defect Distribution

Defects are rarely distributed evenly across the development lifecycle. Defect distribution shows where most errors are found (for example, in unit, functional, or E2E testing) so you can fix the most damaging problems first. It also lets you measure how effectively your test automation identifies high-impact issues.
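A simple way to compute this is to count defects per stage, overall and for high-severity defects only. In the Python sketch below, the `(stage, severity)` record format, the stage names, and the `"high"` severity label are hypothetical; a `"production"` stage stands for defects that escaped testing, which gives a rough signal of how many high-impact issues the automation missed.

```python
from collections import Counter


def defect_distribution(defects: list[tuple[str, str]]) -> dict[str, dict[str, float]]:
    """Share of defects found at each stage, overall and for high-severity ones.

    defects: (stage, severity) pairs. The stage names and the "high" severity
    label are hypothetical; "production" stands for defects that escaped testing.
    """
    all_by_stage = Counter(stage for stage, _ in defects)
    high_by_stage = Counter(stage for stage, severity in defects if severity == "high")
    total, total_high = sum(all_by_stage.values()), sum(high_by_stage.values())
    return {
        stage: {
            "share_of_all": count / total,
            "share_of_high": high_by_stage[stage] / total_high if total_high else 0.0,
        }
        for stage, count in all_by_stage.items()
    }


defects = [
    ("unit", "low"), ("unit", "low"), ("unit", "high"),
    ("functional", "high"), ("functional", "low"),
    ("e2e", "high"),
    ("production", "high"),
]
dist = defect_distribution(defects)
print(dist["production"])  # share of high-severity defects that escaped automation
```

In this made-up data, unit tests catch the most defects overall, but a quarter of the high-severity defects still reached production, which is where improvement effort would pay off first.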

Final Thoughts

These metrics give valuable insight into the good, the bad, and the ugly of a team's automation testing process. They measure agility, productivity, and how often value is delivered to users, and they also track the stability and effectiveness of the team's software development process.

Measure the actual value of your test automation with these aspects in mind and, most importantly, by its contribution to delivering high-quality software to your customers quickly.

Happy Bug Hunting!

Enrique DeCoss | Senior Quality Assurance Manager | FICO

Enrique is an industry leader in quality strategy with 17+ years of experience implementing automation tools. Enrique has a strong background in web tools, API testing strategies, performance testing, Linux OS, and testing techniques. Enrique loves to share his in-depth knowledge in competencies including Selenium, JavaScript, Python, ML testing tools, cloud computing, agile methodologies, and people management.
